Innovation isn’t a single act; it’s an ongoing battle against what’s already established. Change is often not noticeable until it’s too late. The attacker’s advantage is the ability to blindside incumbents.

***

If you believe Thomas Kuhn’s theory outlined in The Structure of Scientific Revolutions, then change happens slowly at first and then all at once.

Innovation: The Attacker’s Advantage, an out-of-print book from 1986, takes a timeless look at this theory and applies it to innovation. This is the Innovator’s Dilemma long before the Innovator’s Dilemma.

The perspective of Richard Foster, the book’s author, is that there is a battle going on in the marketplace between innovators (or attackers) and defenders (who want to maintain their existing advantage).

Some companies have more good years than bad years. What’s the secret behind their success? Foster argues it’s their willingness to cannibalize “their current products and processes just as they are the most lucrative and begin the search again, over and over.”

It is about the inexorable and yet stealthy challenge of new technology and the economics of substitution which force companies to behave like the mythical phoenix, a bird that periodically crashed to earth in order to rejuvenate itself.

The book isn’t about improving process but rather changing your mindset. This is the Attacker’s Advantage.

Henry Ford understood this mindset. In My Life and Work, he wrote,

If to petrify is success, all one has to do is to humor the lazy side of the mind; but if to grow is success, then one must wake up anew every morning and keep awake all day. I saw great businesses become but the ghost of a name because someone thought they could be managed just as they were always managed, and though the management may have been most excellent in its day, its excellence consisted in its alertness to its day, and not in slavish following of its yesterdays. Life, as I see it, is not a location, but a journey. Even the man who most feels himself ‘settled’ is not settled—he is probably sagging back. Everything is in flux, and was meant to be. Life flows. We may live at the same number of the street, but it is never the same man who lives there.

[…]

It could almost be written down as a formula that when a man begins to think that he at last has found his method, he had better begin a most searching examination of himself to see whether some part of his brain has not gone to sleep.

Foster recognizes that innovation is “born from individual greatness” but exists within the context of a marketplace where the S-curve dominates and questions such as “how much change is possible, when it will occur, and how much it will cost,” are critical factors.

Companies are often blindsided by change. Everything is profitable until it isn’t. But leading companies are supposed to have an advantage. Or, are “the advantages outweighed by other inherent disadvantages?” Foster argues this is the case.

The roots of this failure lie in the assumptions behind the key decisions that all companies have to make. Most of the managers of companies that enjoy transitory success assume that tomorrow will be more or less like today. That significant change is unlikely, is unpredictable, and in any case will come slowly. They have thus focused their efforts on making their operations ever more cost effective. While valuing innovation and espousing the latest theories on entrepreneurship, they still believe it is a highly personalized process that cannot be managed or planned to any significant extent. They believe that innovation is risky, more risky than defending their present business.

Some companies make the opposite assumption. They assume tomorrow does not resemble today.

They have assumed that when change comes it will come swiftly. They believe that there are certain patterns of change which are predictable and subject to analysis. They have focused more on being in the right technologies at the right time, being able to protect their positions, and having the best people rather than on becoming ever more efficient in their current lines of business. They believe that innovation is inevitable and manageable. They believe that managing innovation is the key to sustaining high levels of performance for their shareholders. They assume that the innovators, the attackers, will ultimately have the advantage, and they seek to be among those attackers, while not relinquishing the benefits of the present business which they actively defend. They know they will face problems and go through hard times, but they are prepared to weather them. They assume that as risky as innovation is, not innovating is even riskier.

These beliefs are based on a different understanding of competition.

The S-Curve

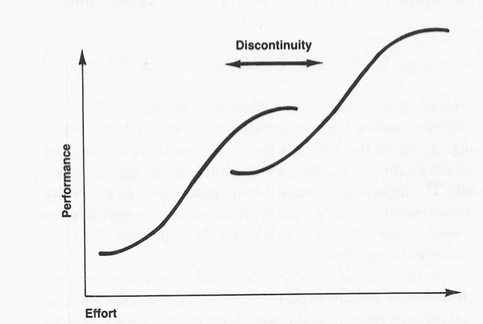

The S-curve is a graph of the relationship between the effort put into improving a product or process and the results one gets back for the investment. It’s called the S-curve because when the results are plotted, what usually appears is a sinuous line shaped like an S, but pulled to the right at the top and pulled to the left at the bottom.

Initially, as funds are put into developing a new product or process, progress is very slow. Then all hell breaks loose as the key knowledge necessary to make advances is put in place. Finally, as more dollars are put into the development of a product or process, it becomes more and more difficult and expensive to make technical progress. Ships don’t sail much faster, cash registers don’t work much better, and clothes don’t get much cleaner. And that is because of limits at the top of the S-curve.
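The shape described above can be sketched numerically. The book, as quoted here, gives no formula, so the logistic function below is an assumption chosen only because it produces an S shape; the names `s_curve` and `marginal_return` and every parameter value are hypothetical.

```python
import math

def s_curve(effort, limit=100.0, steepness=1.0, midpoint=5.0):
    """Performance reached after a cumulative amount of effort.

    Assumption: a logistic curve stands in for the S-curve. `limit` is the
    ceiling the technology can never exceed.
    """
    return limit / (1.0 + math.exp(-steepness * (effort - midpoint)))

def marginal_return(effort, step=1.0):
    """Extra performance bought by one more `step` of effort."""
    return s_curve(effort + step) - s_curve(effort)

early = marginal_return(0.0)   # slow start: little to show for the money
middle = marginal_return(4.5)  # steep part: the key knowledge is in place
late = marginal_return(9.0)    # near the limit: progress is expensive again

print(f"early={early:.2f} middle={middle:.2f} late={late:.2f}")
```

Under these assumed parameters, the same unit of effort buys far more progress in the middle of the curve than at either end, which is the pattern the passage describes.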

Limits are the key to understanding the S-curve in the innovation context. When we approach a limit, “we must change or not progress anymore.” Management’s ability to recognize limits and change course becomes key.

If you are at the limit, no matter how hard you try you cannot make progress. As you approach limits, the cost of making progress accelerates dramatically. Therefore, knowing the limit is crucial for a company if it is to anticipate change or at least stop pouring money into something that can’t be improved. The problem for most companies is that they never know their limits. They do not systematically seek the one beacon in the night storm that will tell them just how far they can improve their products and processes.
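One way to see why the cost of progress accelerates near a limit is to invert the curve and ask how much effort a given gain requires. This is a minimal sketch that again assumes a logistic S-curve, which is not something the book specifies; `effort_needed`, `cost_of_gain`, and the parameter values are hypothetical.

```python
import math

def effort_needed(performance, limit=100.0, steepness=1.0, midpoint=5.0):
    """Cumulative effort required to reach `performance`.

    Assumption: this is the inverse of a logistic S-curve. The limit itself
    is unreachable, so asking for it raises an error.
    """
    if not 0.0 < performance < limit:
        raise ValueError("performance must lie strictly between 0 and the limit")
    return midpoint - math.log(limit / performance - 1.0) / steepness

def cost_of_gain(start, end):
    """Effort needed to move performance from `start` to `end`."""
    return effort_needed(end) - effort_needed(start)

mid_gain = cost_of_gain(50.0, 60.0)   # ten points on the steep part
late_gain = cost_of_gain(85.0, 95.0)  # ten points close to the limit

print(f"mid={mid_gain:.2f} late={late_gain:.2f}")
```

With these assumed numbers, the same ten-point improvement costs roughly three times as much effort near the limit as it does mid-curve, and no amount of effort reaches the limit itself.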

Foster argues that if you don’t understand limits and S-curves, you get blindsided by change. I think that argument is too neat: you can understand limits and S-curves and still get blindsided, although the odds are lower. You can think of the S-curve as the blindsided curve or the attacker’s curve, depending on your perspective.

For the S-curve to have practical significance there must be technological change in the wind. That is, one competitor must be nearing its limits, while others, perhaps less experienced, are exploring alternative technologies with higher limits. But this is almost always the case. I call the periods of change from one group of products or processes to another, technological discontinuities. There is a break between the S-curves and a new one begins to form. Not from the same knowledge that underlays the old one but from an entirely new and different knowledge base.

I think this argument is starting to sound a lot like the Innovator’s Dilemma, but more than a decade earlier.

Technological discontinuities are arriving with increasing frequency because we’re in the early stages of the technological revolution. Eventually, these developments will revert to the mean, and disruptive innovation will become less frequent and incremental innovation more common. Disruptive innovation favors the attacker, whereas incremental favors the incumbent — going from Zero to One will be harder.

As limits are approached, incremental improvement becomes increasingly expensive.

At the same time, the possibility of new approaches often emerges—new possibilities that frequently depend on skills not well developed in leader companies. As these attacks are launched, they are often unnoticed by the leader, hidden from view by conventional economic analysis. When the youthful attacker is strong he is quite prepared for battle by virtue of success and training in market niches. The defender, lulled by the security of strong economic performance for a long time and by conventional management wisdom that encourages him to stay his course, and buoyed by faith in evolutionary change, finds it’s too late to respond. The final battle is swift and the leader loses.

This means the standard “stick to your knitting” argument becomes contextual and thus psychologically difficult. Sometimes the best strategy may be to move to something unfamiliar. I’d argue that the competitive drive for efficiency makes a lot of companies increasingly fragile. Most dangerous of all, they are blind to their fragility.

The S-curve, limits and attacker’s advantages are at the heart of these problems and they also provide the key to solving them. For example, there are people, call them limitists, who have an unusual ability to recognize limits and ways around them. They ought to be hired or promoted. There are others who can spot ways to circumvent limits by switching to new approaches. They are essential too. Imaginary products need to be designed to understand when a competitive threat is likely to become a reality. Hybrid products that seem to be messy assemblages of old and new technologies (like steam ships with sails) can sometimes be essential for competitive success. Companies can set up separate divisions to produce new technologies and products to compete with old ones. S-curves can be sketched and used to anticipate trouble.

None of this is easy. And it won’t happen unless the chief executive replaces his search for efficiency with a quest for competitiveness.

[…]

Most top executives understand, I think, that technological change is relevant to them and that it is useless and misleading to label their business as high-tech or low-tech. What they don’t have is a picture of the engines of the process by which technology is transformed into competitive advantage and how they can thus get their hands on the throttle.

“If change occurs at the time learning starts to slow,” wrote Phillip Moffitt in a 1980s Esquire article entitled The Dark Side of Excellence, “… then there is a chance to avoid the dramatic deterioration. If we call this the ‘observation point,’ when you can see the past and the future, then there is time to reconsider what one is doing.”

Understanding Limits

Limits are important because of what they imply for the future of the business. For example, we know from the S-curve that as the limits are approached it becomes increasingly expensive to carry out further development. This means that a company will have to increase its technical expenditures at a more rapid pace than in the past in order to maintain the same rate of progress of technical advance in the marketplace, or it will have to accept a declining rate of progress. The slower rate of change could make the company more vulnerable to competitive attack or presage price and profit declines. Neither option is very attractive; they both signal a tougher environment ahead as the limits are approached. Being close to the limits means that all the important opportunities to improve the business by improving the technology have been used. If the business is going to continue to grow and prosper in the future, it will have to look to functional skills other than technology—say marketing, manufacturing or purchasing. Said another way, as the limits of a technology are reached, the key factors for success in the business change. The actions and strategies that have been responsible for the successes of the past will no longer suffice for the future. Things will have to change. Discontinuity is on the way. It is the maturing of a technology, that is the approach to a limit, which opens up the possibility of competitors catching up to the recognized market leader. If the competitors better anticipate the future key factors for success, they will move ahead of the market leaders.

[…]

If one knows that the technology has little potential left, that it will be expensive to tap, and that another technology has more potential (that is, is further from its limits), then one can infer that it may be only a matter of time before a technological discontinuity erupts with its almost inevitable competitive consequence.

Thus finding the limit becomes important.

Finding the Limit

All this presumes we know the answer to the question “Limits of what?” The “what,” as Owens Corning expressed it, was the “technical factors of our product that were most important to the customer.” The trick is relating these “technical factors,” which are measurable attributes of the product or process to the factors that customers perceive as important when making their purchase decision. This is often easy enough when selling products to sophisticated industrial users because suppliers and customers alike have come to focus on these variables, for example, the specific fuel consumption of a jet engine or the purity of a chemical. But it is much tougher to understand these relationships in the consumer arena. How does one measure how clean our clothes are? Do we do it the same way at home as the scientists do in the lab? Do we really measure “cleanness,” or its “brightness” or a “fresh smell” or “bounce?” All of these are attributes of “clean” clothes which may have nothing whatsoever to do with how much dirt is in the clothes. … These are complicated questions to answer because different consumers will feel differently about these factors, creating confusion in the lab. Further, once the consumer has expressed his preference it may be difficult to measure that preference in technical terms. For example, what does “fit” mean? What are the limits of “fit”? If the attribute that consumers want cannot be expressed in technical terms, clearly its limit cannot be found.

Further complicating the seemingly simple question of “limits of what?” is the realization that the consumer’s passion for more of the attribute may be a function of the levels of the attribute itself.

For example, in the detergent battles of the 1950s, P&G and its competitors were all vying to make a product that would produce the “cleanest” clothes. It was soon discovered that in fact the clothes were about as clean as they could ever get. The dirt had been removed, but the clothes often had acquired a gray, dingy look that the consumer associated with dirt. In fact, the gray look was caused by torn and frayed fibers, but the consumer did not appreciate this apparently arcane technical detail. Rather than fight with consumers P&G decided to capitalize on their misperceptions and add “optical brighteners” to the detergent. These are chemicals that reflect light. When they were added to the detergent and were retained on the clothes, they made the clothes appear brighter and therefore cleaner in the consumer’s eyes, even though in the true sense they weren’t any cleaner.

The consumers loved it, and bought all the Tide they could get in order to get their clothes “clean,” that is optically bright.

[…]

Another complication with performance parameters is that they keep changing. Frequently this change is due to the consumer’s satisfaction with the present levels of product performance; optical brightness in our prior example. This often triggers a change in what customers are looking for. No longer will they be satisfied with optical brightness alone; now they want “bounce” or “fresh smell,” and the basis of competition changes. These changes can be due to a change in the social or economic environment as well. For example, new environmental laws (which led to biodegradable detergents), a change in the price of energy, or the emergence of a heretofore unavailable competitive product like the compact audio disc or high-definition TV. These changes in performance factors should trigger the establishment of new sets of tests and standards for the researchers and engineers involved in new product development. But often they don’t. They don’t because these changes are time-consuming and expensive to make, and they are difficult to think through. Thus it often appears easier to just not make the change. But, of course, this decision carries with it potentially significant competitive risks.

The people who should see these changing preferences, the salesmen, often do not because they have a strong incentive to sell today’s products. So the very people the organization has put in place to stay close to the customer often fail to keep it informed of a changing landscape. And even when they do, it’s still a complicated process to get companies to act on that information.

… The people we rely on to keep us close to the customer and new developments often do not. So our structure and systems work to confirm our disposition to keep doing things the same way. As Alan Kantrow, editor at the Harvard Business Review, puts it, “Our receptor sites are carrying the same chemical codes that we carry. We are thus likely to see only what we expect and want to see.” The chief executive says, “I’ve done good things. We’re scanning our environment.” But in fact he is scanning his own mind.

Even if sales and marketing do perceive the need for change, they may not take their discovery back to their technical departments for consideration. If the technical departments do hear about these developments, they may not be able to do much about them because of the press of other projects. So all in all, changes in customer preferences get transmitted slowly, usually only after special studies are done specifically to examine changing customer preferences. All this means that answering the “limits of what” question can be tricky under the best of circumstances, and much tougher in an ongoing business.

There are limits to limits, of course. First, just because you’re approaching a limit doesn’t mean there is an effective substitute that can solve the problem better. However, “if there is an alternative, and it is economic, then the way the competitors do battle in the industry will change.” Second, it’s possible to be wrong about limits and thus draw the wrong conclusions.

A great example of this is Simon Newcomb, the celebrated astronomer, who in 1900 said, “The demonstration that no possible combination of known substances, known forms of machinery and known forms of force, can be united in a practical machine by which men shall fly long distances through the air, seems to the writer as complete as it is possible for the demonstration to be.” Two years later, he clarified, “Flight by machines heavier than air is unpractical and insignificant, if not utterly impossible.” It wasn’t even a year before the Wright brothers proved him wrong at Kitty Hawk.

Diminishing Returns

One mistake we make is to confuse time and effort.

It is not the passage of time that leads to progress, but the application of effort. If we plotted our results versus time, we could not by extrapolation draw any conclusion about the future because we would have buried in our time chart implicit assumptions about the rate of effort applied. If we were to change this rate, it would increase or decrease the time it would take for performance to improve. People frequently make the error of trying to plot technological progress versus time and then find the predictions don’t come to pass. Most of the reason for this is not the difficulty of predicting how the technology will evolve, since we have found the S-curve to be rather stable, but rather predicting the rate at which competitors will spend money to develop the technology. The forecasting error is a result of bad competitive analysis, not bad technology analysis.

Thus, it might appear that a technology still has a great potential but in fact what is fuelling its advance is rapidly increasing amounts of investment.
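The difference between plotting against time and plotting against effort can be illustrated with a small sketch. It assumes a logistic S-curve and a constant yearly spending rate, neither of which comes from the book; `years_to_reach` and all numbers are hypothetical.

```python
import math

def s_curve(effort, limit=100.0, steepness=1.0, midpoint=5.0):
    """Performance as a function of cumulative effort (assumed logistic form)."""
    return limit / (1.0 + math.exp(-steepness * (effort - midpoint)))

def years_to_reach(target, effort_per_year, max_years=100):
    """Years of steady spending until performance first reaches `target`.

    Returns None if the target is not reached within `max_years`.
    """
    for year in range(1, max_years + 1):
        if s_curve(effort_per_year * year) >= target:
            return year
    return None

# Same technology, same S-curve, two different spending rates.
slow = years_to_reach(90.0, effort_per_year=0.5)
fast = years_to_reach(90.0, effort_per_year=2.0)

print(slow, fast)  # prints: 15 4
```

The curve is identical in both cases; only the rate of investment differs. A forecast drawn against time would therefore be off by more than a decade if a competitor quadrupled its spending, even though the technology analysis itself was sound.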

Psychologically, we believe the more effort we put in, the more results we should see. This has disastrous effects in organizations unable to recognize limits.

Often there is more than one S-curve; the gap between them represents a discontinuity.

Efficiency Versus Effectiveness

Effectiveness is set when a company determines which S-curve it will pursue (e.g., vacuum tubes or solid state). Efficiency is the slope of the present curve. Effectiveness deals with sustaining a strategy; efficiency, with the present utilization of resources. Moving into a new technology almost always appears to be less efficient than staying with the present technology because of the need to bring the new technology up to speed. The cost of progress of an established technology is compared with that of one in its infancy, even though it may eventually cost much less to bring the new technology up to the state of the art than it did to bring the present one there. To paraphrase a comment I’ve heard many times at budget meetings: “In any case the new technology development cost is above and beyond what we’re already paying. Since it doesn’t get us any further than we presently are, it cannot make sense.” The problem with that argument is that someday it will be ten or twenty or thirty times more efficient to invest in the new technology, and it will outperform the existing technology by a wide margin.

There are many decisions that put effectiveness and efficiency at odds with each other, particularly those involving resource allocation. This is one of the toughest areas to come to grips with because it means withdrawing resources from the maturing business.

[…]

In addition, many companies have management policies that, interpreted literally, impede moving from one S-curve to another. For example, “Our first priority will be to protect our existing businesses.” Or “We will operate each business on a self-sustaining basis; each will have to provide its own cash as well as make a contribution to corporate overhead.” These rules are established either in a period of relaxed competition or out of political necessity.

The fundamental dilemma is that it always appears to be more economic to protect the old business than to feed the new one, at least until competitors pursuing the new approach get the upper hand. Conventional financial theory has no practical way to take account of the opportunity cost of not investing in the new technology. If it did, the decision to invest in the present technology would often be reversed.

Metrics become distorted, and defenders believe they are more productive than they are. Attackers and defenders look at productivity differently.

Even if a defender succeeds in managing his own S-curve better, chances are he will not be able to raise his efficiency by more than, say, 50 percent. Not much use against an attacker whose productivity might be climbing ten times faster because he has chosen a different S-curve. All too frequently the defender believes his productivity is actually higher than his attacker’s and ignores what the attacker potentially may have to offer the customer. Defenders and attackers often have a different perspective when it comes to judging productivity. For the attacker, productivity is the improvement in performance of his new product over his old product divided by the effort he puts into developing the new product. If his technology is beginning to approach the steep part of its S-curve, this could be a big number. The defender, however, observes the productivity through the eyes of the market, which may still be treating the new product as not much more than a curiosity. So in his eyes the attacker’s productivity is quite low. We’ve seen this happen time and again in the electronics industry. Products such as microwaves, audio cassettes and floppy discs failed at first to meet customer standards, but then, almost overnight, they set new high-quality standards and stormed the market.
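The attacker’s definition of productivity (improvement in performance divided by the effort spent) can be put into a rough sketch. Everything below is illustrative: the logistic form, the two limits of 100 and 300, and the positions of each player on their curves are assumptions, not figures from the book.

```python
import math

def s_curve(effort, limit, steepness=1.0, midpoint=5.0):
    """Performance as a function of cumulative effort (assumed logistic form)."""
    return limit / (1.0 + math.exp(-steepness * (effort - midpoint)))

def productivity(effort_so_far, extra_effort, limit):
    """Gain in performance per unit of additional effort."""
    before = s_curve(effort_so_far, limit)
    after = s_curve(effort_so_far + extra_effort, limit)
    return (after - before) / extra_effort

# Defender: far along a mature curve with a low ceiling.
defender = productivity(effort_so_far=8.0, extra_effort=1.0, limit=100.0)
# Attacker: entering the steep part of a new curve with a higher ceiling.
attacker = productivity(effort_so_far=3.0, extra_effort=1.0, limit=300.0)

defender_level = s_curve(9.0, limit=100.0)
attacker_level = s_curve(4.0, limit=300.0)

print(f"productivity: defender={defender:.1f} attacker={attacker:.1f}")
print(f"performance:  defender={defender_level:.1f} attacker={attacker_level:.1f}")
```

In this hypothetical setup the attacker’s product still performs worse than the defender’s, which is what the market sees, while the attacker’s productivity is already many times higher. That gap between the two views is the mismatch in perspective the passage describes.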

Even if the defender admits that the attacker’s product may have an edge, he is likely to say it is too small to matter. Since the first version of a wholly new product is frequently just marginally better than the existing product, the defender often thinks the attacker’s productivity is lower, not higher than his own. The danger comes in using this erroneous perception to figure out what is going to happen next. Too often defenders err by thinking that the attacker’s second generation new product will require enormous resources and result in little progress. We know differently. We know from the mathematics of adolescent S-curves that once the first crack appears in the market dam, the flood cannot be far behind. And further, it won’t cost nearly as much since the first product has absorbed much of the start-up costs. No doubt this will be a big shock to the defender who will tell the stock market analysts, “Well, the attacker was just lucky. There was nothing in his record to suggest he could have pulled this thing off.” All true. From the defender’s viewpoint there was nothing in the attacker’s record to suggest that a change was coming. But the underlying forces were at work nevertheless, and in the end they appeared.

Innovation: The Attacker’s Advantage explores why leaders lose and what you can do about it.